… or parts of it anyway.

The European Union is generally up to lots of weird and wonderful things, one of the more esoteric being the “Tenders Electronic Daily” (TED) database. Basically, all public procurement above a certain value has to go through this database so that companies from all over the world have a fair chance of winning the contracts. Once a tender is won, the names and addresses of the winning companies are also posted. All this in the hope that Europeans will get more roads for their tax-euros and that less money will disappear into the mayor’s cousin’s pocket.

The database is hosted at http://ted.europa.eu/. It’s publicly available, but you can’t obtain the data in bulk unless you pay a lot of money. This post describes how data can be scraped en masse through the web interface. I’ve written it partly to brag, partly to help you out on the off chance that you someday need to analyse these contracts (in which case I sincerely hope you stumble on this post).

A typical won tender looks like this (you need to be logged in; registration is free):

http://ted.europa.eu/Exec?DataFlow=N_list_results.dfl&Template=TED/N_result_details_data.htm&Page=1&docnumber=2005238380&StatLang=EN

http://ted.europa.eu/Exec?DataFlow=N_list_results.dfl&Template=TED/N_result_details_curr.htm&Page=1&docnumber=2005238380&StatLang=EN

Notice that there are two interesting pages per contract: one has structured data, the other has unstructured text (the “Document family” stuff is also pretty interesting, but we won’t go into that here). Also note the “docnumber” URL parameter. It turns out to be composed of two parts: the year (2005 for this particular contract) and an integer (238380). The integer part starts from 000001 in January and is incremented as contracts accrue through the year. There are very few holes in this sequence, although it seems a few documents are redacted each year. The problem now looks as simple as determining the maximum docnumber for each year and wading through all of them, downloading both the data and text parts, right?
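To make the numbering scheme concrete, here is a minimal sketch of how docnumbers and the two page URLs could be generated. The `TedUrls` class and its method names are made up for illustration, and the fixed six-digit padding is an assumption based on the 000001 starting value.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper, not part of the original scraper.
static class TedUrls
{
    const string Base = "http://ted.europa.eu/Exec?DataFlow=N_list_results.dfl";

    // docnumber = four-digit year followed by a zero-padded sequence number
    // (six digits assumed, as in 2005238380).
    public static string DocNumber(int year, int sequence)
    {
        return string.Format("{0:D4}{1:D6}", year, sequence);
    }

    // Page with the structured data for one contract.
    public static string DataUrl(string docNumber)
    {
        return Base + "&Template=TED/N_result_details_data.htm&Page=1&docnumber="
            + docNumber + "&StatLang=EN";
    }

    // Page with the unstructured text for the same contract.
    public static string TextUrl(string docNumber)
    {
        return Base + "&Template=TED/N_result_details_curr.htm&Page=1&docnumber="
            + docNumber + "&StatLang=EN";
    }

    // Every candidate docnumber for a year, given that year's highest sequence number.
    public static IEnumerable<string> DocNumbersForYear(int year, int maxSequence)
    {
        for (int sequence = 1; sequence <= maxSequence; sequence++)
        {
            yield return DocNumber(year, sequence);
        }
    }
}
```

The highest sequence number for each year still has to be found by hand or by probing, and a scraper should expect the occasional missing document in the sequence.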

Unfortunately, the login requirement will foil you: to get at a contract more than a few weeks old, you need to be logged in. Being considered “logged in” by TED turns out to entail sending a valid JSESSIONID cookie. The browser obviously accomplishes this automatically when you interact with the web site, but replicating it in a scraper proved to be extraordinarily difficult. In fact, I spent the better part of a week looking at Wireshark traces before I managed to reverse engineer the process. There are three steps:

- Post username and password to http://ted.europa.eu/eLogin
- Simulate a search by posting to http://ted.europa.eu/ExecSessionVariables
- Simulate browsing the search result by getting http://ted.europa.eu/Exec?DataFlow=ShowPage.dfl&TableName=TED_EN&Template=TED/N_result_list.htm&Page=1&toconf=yes

Any sane login process would obviously stop after the first step, but for some reason you have to build up state for your session on the server before you are allowed to retrieve random documents. The exact nature of the search being simulated is completely irrelevant; I just use a giant string mined from a random Wireshark trace. The web site is built on some arcane Java stack, the convolutedness of which must be pretty amazing (posting incomplete requests will net you a stack trace that also reveals the supplier, who shall go unnamed here). Below, I’ve posted a C# class that will do the right thing. Note the gotcha that HttpWebRequest will not absorb cookies received during posts, so you have to record these yourself.

Once you can get valid session ids, getting all the data is pretty straightforward, although it will probably take a while. I highly recommend requesting gzipped data from the EU server (by including an “Accept-Encoding: gzip” header in the request), as it will drastically cut down on bandwidth usage. You can safely have multiple scrapers hammering the website concurrently; I found 10 to be a good number. Note that the servers are apparently rebooted around midnight CET each day, during which time requests tend to fail.
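As a rough illustration of the download side, the sketch below builds a request that carries the session cookie, asks for gzip, and retries when a request fails. It is not the scraper I actually ran: the `TedFetch` class, its method names, the retry parameters and the UTF-8 decoding are all assumptions made for the example.

```csharp
using System;
using System.IO;
using System.Net;
using System.Text;
using System.Threading;

// Hypothetical helper, not part of the original scraper.
static class TedFetch
{
    // Builds a GET request carrying the session cookie and asking for gzip.
    // Nothing is sent until GetResponse() is called.
    public static HttpWebRequest CreateRequest(string url, string sessionId)
    {
        HttpWebRequest req = (HttpWebRequest)WebRequest.Create(url);
        req.Method = "GET";
        req.CookieContainer = new CookieContainer();
        // Path "/" so the cookie is sent for every page on the host.
        req.CookieContainer.Add(new Uri(url), new Cookie("JSESSIONID", sessionId, "/"));
        // Adds "Accept-Encoding: gzip" and decompresses the body transparently.
        req.AutomaticDecompression = DecompressionMethods.GZip;
        return req;
    }

    // Downloads one page, pausing and retrying on failure
    // (for example while the servers reboot around midnight CET).
    public static string Download(string url, string sessionId, int maxAttempts, TimeSpan pause)
    {
        for (int attempt = 1; ; attempt++)
        {
            try
            {
                using (WebResponse response = CreateRequest(url, sessionId).GetResponse())
                using (StreamReader reader =
                    new StreamReader(response.GetResponseStream(), Encoding.UTF8)) // encoding assumed
                {
                    return reader.ReadToEnd();
                }
            }
            catch (WebException)
            {
                if (attempt >= maxAttempts) throw;
                Thread.Sleep(pause);
            }
        }
    }
}
```

If you run several of these in parallel inside one process on the .NET Framework, remember that `ServicePointManager.DefaultConnectionLimit` defaults to 2 connections per host, so it needs to be raised to actually get 10 concurrent downloads.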

Remember that you can browse the contract information on Google Maps here: http://tedbot.itu.dk/

And the code:

using System;

using System.Text;

using System.Net;

using System.IO;

using System.Net.Security;

using System.Security.Principal;

namespace TED.Scraper

{

class Authenticator

{

static string userid = "username";

static string password = "password";

public enum MethodType { Get, Post };

static string extSearchq = @"Name=statisticMode%40fulltext_textfield"+

"%40OJ_textfield%40country_textfield"+

"%40place_textfield%40contract_textfield"+

"%40procedure_textfield%40document_textfield"+

"%40regulation_textfield%40CPV_textfield%40NUTS_textfield"+

"%40publication_textfield%40docnumber_textfield%40datedoc_textfield"+

"%40deadline_textfield%40type_author_textfield%40name_author_textfield"+

"%40heading_textfield%40activity_textfield%40fulltext_textfield_hid"+

"%40OJ_textfield_hid%40country_textfield_hid%40place_textfield_hid"+

"%40contract_textfield_hid%40procedure_textfield_hid%40document_textfield_hid"+

"%40regulation_textfield_hid%40CPV_textfield_hid%40NUTS_textfield_hid"+

"%40publication_textfield_hid%40docnumber_textfield_hid%40datedoc_textfield_hid"+

"%40deadline_textfield_hid%40type_author_textfield_hid%40name_author_textfield_hid"+

"%40heading_textfield_hid%40activity_textfield_hid%40docLang%40maxRow%40SelRetrieval" +

"%40FTIndex%40SearchFrom%40ExpertQry%40op1%40op2%40Query%40ErrorMes%40AdviceMes&Value=No" +

"%40null%40null%40null%40null%40null%40null%40null%40null%40null%40null%40null%40null%40null" +

"%40null%40null%40null%40null%40null%40null%40null%40null%40null%40null%40null%40null%40null" +

"%40null%40null%40null%40null%40null%40null%40null%40null%40null%40null%40EN%4025%40OJ%2CND%2CTI" +

"%40TEDINDEX_ARCHIVE2%40extended%40null%40AND%40AND%40cs_type%3Adb+%40null" +

"%40null&Language=EN&Redirect=Exec%3FDataFlow%3DShowPage.dfl%26TableName%3DTED_EN" +

"%26Template%3DTED%2FN_result_list.htm%26Page%3D1%26toconf" +

"%3Dyes&ErrorMes=null&AdviceMes=null&RedirectError=Exec" +

"%3FDataFlow%3DShowPage.dfl%26Template%3DTED%2Fextended_search%26BANNER%3DEXT";

public static string GetAuthenticatedSessionId()

{

CookieContainer cc = new CookieContainer();

DoHttpRequest("http://ted.europa.eu/eLogin",

MethodType.Post,

true,

String.Format("USERID={0}&PASSWORD={1}", userid, password),

cc);

DoHttpRequest("http://ted.europa.eu/ExecSessionVariables",

MethodType.Post,

true,

extSearchq,

cc);

DoHttpRequest("http://ted.europa.eu/Exec?" +

"DataFlow=ShowPage.dfl&TableName=TED_EN&Template=TED/N_result_list.htm&Page=1&toconf=yes",

MethodType.Get,

false,

null,

cc);

string sessionid = cc.GetCookies(new Uri("http://ted.europa.eu"))["JSESSIONID"].Value;

return sessionid;

}

private static void DoHttpRequest(string url, MethodType mtype,

bool isFormEncoded, string requestString, CookieContainer cc)

{

HttpWebRequest req = (HttpWebRequest)WebRequest.Create(url);

req.Method = mtype.ToString();

req.CookieContainer = cc;

req.AllowAutoRedirect = false;

req.AllowWriteStreamBuffering = true;

req.AuthenticationLevel = AuthenticationLevel.None;

req.ImpersonationLevel = TokenImpersonationLevel.None;

req.UserAgent =

"Mozilla/5.0 (Windows; U; Windows NT 5.1; en-GB; rv:1.9b4) Gecko/2008030714 Firefox/3.0b4";

req.Proxy = null;

if (isFormEncoded)

{

req.ContentType = "application/x-www-form-urlencoded";

req.Expect = null;

byte[] reqData = Encoding.UTF8.GetBytes(requestString);

req.ContentLength = reqData.Length;

Stream reqStream = req.GetRequestStream();

reqStream.Write(reqData, 0, reqData.Length);

reqStream.Close();

}

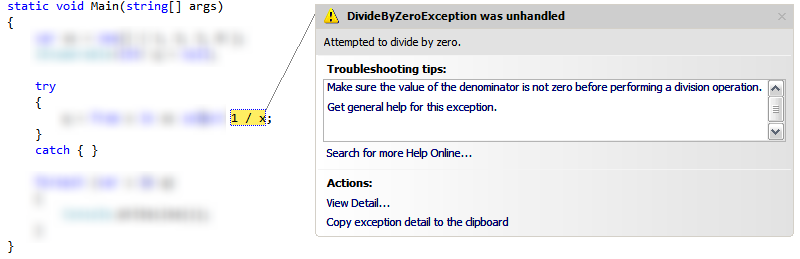

try

{

HttpWebResponse response = (HttpWebResponse)req.GetResponse();

if (isFormEncoded)

{

// seems that cookies received in post are not saved to collection

req.CookieContainer.Add(new Uri("http://ted.europa.eu"), response.Cookies);

}

response.Close();

}

catch (Exception e)

{

throw;

}

}

private static void AddRequestString(HttpWebRequest request, string requeststring)

{

byte[] reqData = Encoding.UTF8.GetBytes(requeststring);

request.ContentLength = reqData.Length;

Stream reqStream = request.GetRequestStream();

reqStream.Write(reqData, 0, reqData.Length);

reqStream.Close();

}

}

}