This post demonstrates the feasibility of face detection in web cam feeds grabbed by a Flash/Flex application. It’s inspired by a prototype by Martin Speelmans, informed by my work with Flash and web cams and my experiments with OpenCV. The basic premise is that a Flex application running in a user’s browser grabs web cam shots, compresses them and sends them to a server. The server decompresses the picture, does face detection on it, and sends the result back to the client, all fast enough to give the user a relatively smooth experience. A fairly impressive Actionscript 3 face detection implementation exists, but my guess is that, barring a quantum leap in Adobe compiler technology, it’s gonna be a few years before fast, reliable Actionscript 3 face detection is feasible in Flash Player. Silverlight may be a different story, but it doesn’t support web cams yet.

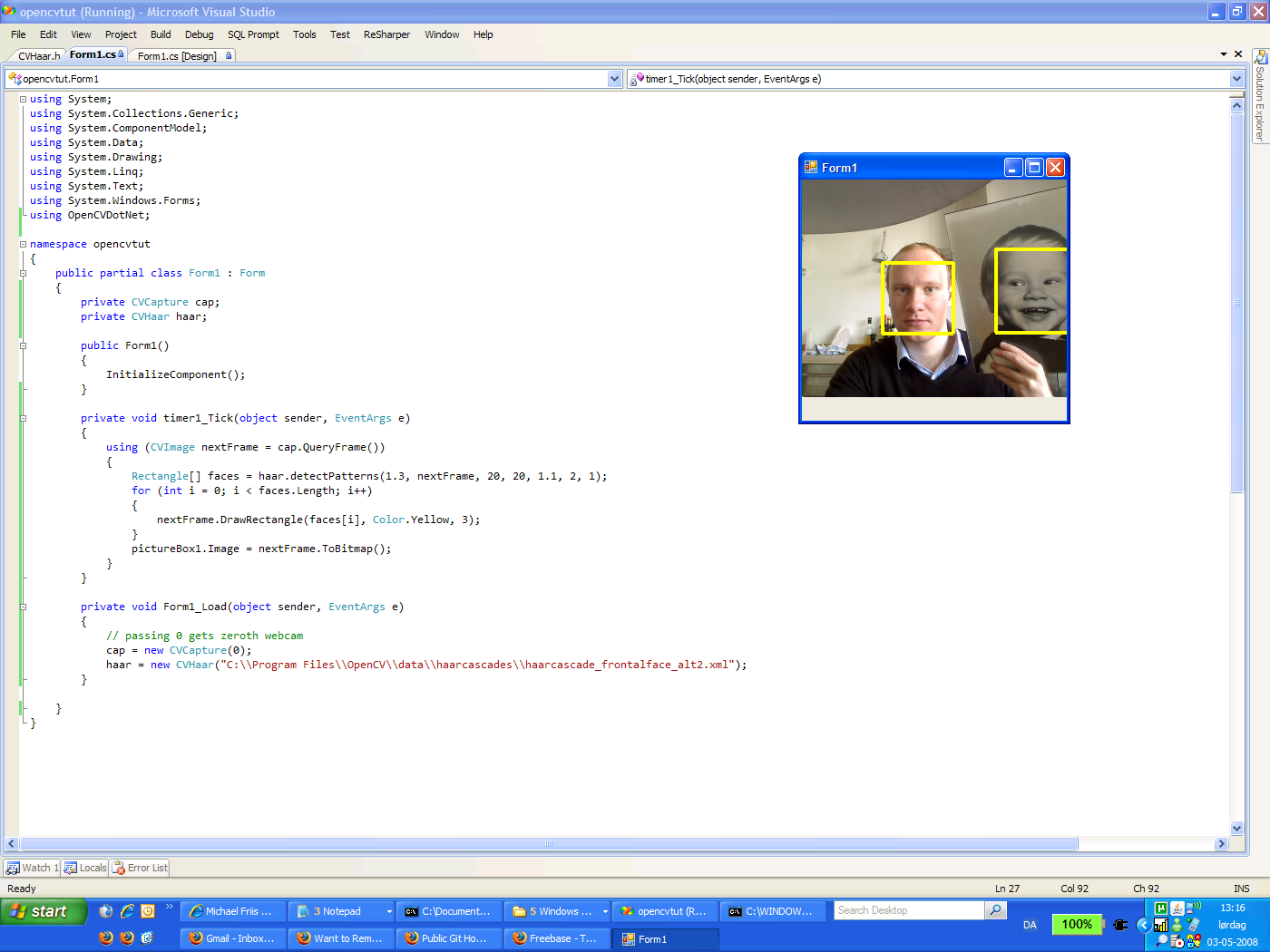

The first prototype was implemented in Flash since I refused to program in XML (which you tend to do in Flex) as a matter of principle. When it turned out that Flash doesn’t support web services, I relented and changed to Flex. The parameter marshalling code that Flex Builder generates turned out to be too slow for low-latency operation though, and was dumped in favour of an HTTPService. The server part is implemented in ASP.Net, with C# calling EmguCV-wrapped OpenCV. The code is at the bottom of the post.

The client goes through the following steps:

- Setting up the UI, getting the web cam running, setting up a timer to tick three times a second.

- On timer ticks, a shot is grabbed from the web cam.

- The picture is scaled to 320×240 pixels. The scaling is handled by native library code and takes a few milliseconds.

- The picture is converted to grayscale. OpenCV face detection only operates on one colour channel anyway, and grayscale images compress better. The colour transformation is handled by more native library code and also takes just a few milliseconds.

- The picture is then PNG encoded. An encoder lives in the mx.graphics.codec namespace and at ~150ms for a 320×240 pic, it’s pretty fast. The resulting bytearray is around 60 KB.

- The bytearray is base64 encoded, which takes around 100ms.

- Finally, the base64 encoded string is sent to the server along with a sequence number. The server returns the sequence number so that the client can make sure it doesn’t use old, out-of-sequence results.
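The last two client steps can be sketched in a few lines. This is a minimal Python illustration rather than the Actionscript from the actual client, and it assumes the PNG bytes already exist. The class name `FrameSender` and the form field names `seq` and `image` are made up for the example.

```python
import base64
from urllib.parse import urlencode, parse_qs


class FrameSender:
    """Tracks sequence numbers so stale server replies can be ignored."""

    def __init__(self):
        self.next_seq = 0        # sequence number for the next outgoing frame
        self.latest_shown = -1   # highest sequence number already applied

    def build_post_body(self, png_bytes):
        """Base64-encode one PNG frame and attach a sequence number."""
        seq = self.next_seq
        self.next_seq += 1
        # Field names "seq" and "image" are hypothetical.
        body = urlencode({
            "seq": seq,
            "image": base64.b64encode(png_bytes).decode("ascii"),
        })
        return seq, body

    def accept(self, reply_seq):
        """Return True only if the reply is newer than anything shown so far."""
        if reply_seq <= self.latest_shown:
            return False
        self.latest_shown = reply_seq
        return True


if __name__ == "__main__":
    sender = FrameSender()
    seq, body = sender.build_post_body(b"not a real PNG")
    # What a server would see after parsing the form body.
    print(seq, parse_qs(body)["image"][0])
```

With three requests a second in flight, replies can arrive out of order. `accept` simply drops any reply whose sequence number is not higher than the last one used.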

Upon receipt of a post, the server does the following:

- The request string is base64 decoded to a bytearray.

- A bitmap is created from the bytearray and made into an EmguCV grayscale image.

- The faces are identified with a Haar Cascade detector.

- The results, along with some performance information and the sequence number, are returned to the client as XML.
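The server steps above have roughly the following shape. This is a Python sketch using only the standard library, not the C#/EmguCV code that the server actually runs. The detector is passed in as a plain function (`detect_faces`, a stand-in for the Haar cascade call), and the XML element and attribute names are invented for illustration.

```python
import base64
import time
import xml.etree.ElementTree as ET

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"  # first eight bytes of every PNG file


def handle_frame(image_b64, seq, detect_faces):
    """Decode one posted frame, run detection, and return an XML reply.

    detect_faces is a stand-in for the real detector: it takes PNG bytes
    and returns a list of (x, y, width, height) rectangles.
    """
    png_bytes = base64.b64decode(image_b64)
    if not png_bytes.startswith(PNG_SIGNATURE):
        raise ValueError("payload is not a PNG image")

    # Time only the detection call, since that is where the time goes.
    started = time.perf_counter()
    faces = detect_faces(png_bytes)
    elapsed_ms = (time.perf_counter() - started) * 1000.0

    # Element and attribute names are hypothetical.
    root = ET.Element("result", seq=str(seq), detectMs=f"{elapsed_ms:.1f}")
    for x, y, w, h in faces:
        ET.SubElement(root, "face", x=str(x), y=str(y),
                      width=str(w), height=str(h))
    return ET.tostring(root, encoding="unicode")
```

Echoing `seq` back in the reply is what lets the client throw away results that arrive late.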

Total server-side time is around 150ms, with almost all of it spent in the face detection call. The client can do whatever it wants with the face information; my demonstration projects a bitmap on top of detected faces in the video feed. Total roundtrip time is less than half a second at three frames a second on a connection that isn’t bandwidth constrained, in the same city as the server. This is probably enough to give relatively passive users the illusion of real-time face detection. I’ve also gotten positive reports from testers in the US, where latency is higher.
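The numbers quoted so far allow a quick back-of-the-envelope check of upload bandwidth and of the time left over for everything else. The sketch below assumes that "60 KB" means 60,000 bytes, ignores HTTP headers and URL-encoding overhead, and assumes the half-second roundtrip figure includes the client-side encoding steps. None of those assumptions is confirmed by the measurements.

```python
PNG_BYTES = 60_000        # approximate PNG size per frame
FRAMES_PER_SECOND = 3     # timer rate on the client

# Measured stages, in milliseconds.
CLIENT_PNG_MS = 150
CLIENT_BASE64_MS = 100
SERVER_MS = 150


def base64_length(n_bytes):
    """Length of the base64 text for n_bytes of input, padding included."""
    return 4 * ((n_bytes + 2) // 3)


def upload_bits_per_second(n_bytes, fps):
    """Raw payload bandwidth, ignoring headers and URL encoding."""
    return base64_length(n_bytes) * fps * 8


def remaining_budget_ms(roundtrip_limit_ms=500):
    """Time left for scaling, grayscaling and the network itself."""
    return roundtrip_limit_ms - (CLIENT_PNG_MS + CLIENT_BASE64_MS + SERVER_MS)


if __name__ == "__main__":
    print(base64_length(PNG_BYTES))                              # 80000
    print(upload_bits_per_second(PNG_BYTES, FRAMES_PER_SECOND))  # 1920000
    print(remaining_budget_ms())                                 # 100
```

Under these assumptions base64 inflates each frame to about 80,000 characters, so the client uploads just under 2 Mbit/s, and roughly 100ms is left for the network and the cheap image operations.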

I experimented with zlib compression instead of PNG encoding, but the difference in both space and time was marginal (PNG uses zlib, so that shouldn’t be too surprising). Instead of grayscaling the image, I also tried yanking out just one colour channel from the 32-bit RGBA pixels, but iterating 320x240x4 bytearrays in Actionscript was dead-slow. Ditching HTTP in favour of AMF and using AMF.Net or FluorineFx on the server may improve latency further.
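For reference, here is what the single-channel experiment amounts to, sketched in Python rather than Actionscript. The frame is a synthetic gradient, and the byte order within each pixel (which offset holds which channel) is an assumption, so treat the `offset` argument as something to check against the real pixel layout.

```python
import zlib

WIDTH, HEIGHT = 320, 240


def extract_channel(pixels, offset):
    """Return one channel of a packed 4-bytes-per-pixel buffer.

    offset selects the byte within each pixel (0 to 3).
    """
    return pixels[offset::4]


def make_test_frame():
    """Synthetic horizontal gradient: same value in three channels, 255 in the fourth."""
    frame = bytearray()
    for _y in range(HEIGHT):
        for x in range(WIDTH):
            v = x * 255 // (WIDTH - 1)
            frame += bytes((v, v, v, 255))
    return bytes(frame)


if __name__ == "__main__":
    frame = make_test_frame()
    channel = extract_channel(frame, 0)
    print(len(frame), len(channel))
    print(len(zlib.compress(frame)), len(zlib.compress(channel)))
```

A slice with a stride of four does the whole extraction in native code here, which is exactly what a hand-written per-byte loop cannot do. The sizes printed for a synthetic gradient say nothing about real web cam frames.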

What is the point of all this, you ask? I haven’t the foggiest idea really, but it’s good fun. You can certainly use it to create very silly Facebook applications. You could conceivably use it to detect the mood of your users by determining whether they are smiling or not. Is this usable in a production scenario? Hard to say. The current server is a 3.5GHz Celeron D, a right riceboy machine. With one user hitting it, the CPU is at 20-30% utilization, suggesting it’ll tolerate a few concurrent users at most. Getting a proper machine and installing Intel Performance Primitives would probably help a lot. If you really meant it, you could run the OpenCV part on a PS3 or on a GPU.

Download the important parts of the code here.