Wikileaks Iraq war diaries data quality

tl;dr: The Wikileaks Iraq data is heavily redacted (presumably by Wikileaks) compared to the Afghanistan data: names (of persons, bases, units and more) have been purged from the “Title” and “Summary” column texts, and the precision of the geographical coordinates has been truncated. This makes both researching and visualizing the Iraq data somewhat difficult.

(this is a cross-post from the Ekstra Bladet Bits blog)

Ekstra Bladet received the Iraq data from Wikileaks some time before the Friday 22. 23:00 (DK-time) embargo. We knew the dump was going to be in the exact same format as the Afghanistan one, so loading the data was a snap. When we started running some of the same research scripts used on the Afghanistan data, however, it quickly became clear that something was amiss. For example, we could only find a single report mentioning Danish involvement (namely the “Danish Demining Group”) in the Iraq War. We had drawn up a list of persons, companies and places of interest, but searches for these also turned up nothing. A quick perusal of a few sample reports revealed that almost all identifying names had been purged from the report texts.
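To give an idea of the kind of search described above, here is a minimal sketch in Python. It is not the actual research script: the function name `find_mentions`, the sample rows and the CSV layout are assumptions made for illustration. Only the “Title” and “Summary” column names come from the data as described in this post.

```python
import csv
import io
import re


def find_mentions(rows, terms, columns=("Title", "Summary")):
    """Yield (row, matched_terms) for rows whose text columns mention any term.

    `rows` is an iterable of dicts, e.g. from csv.DictReader.
    Matching is case-insensitive and on whole words only.
    """
    patterns = {
        term: re.compile(r"\b" + re.escape(term) + r"\b", re.IGNORECASE)
        for term in terms
    }
    for row in rows:
        text = " ".join(row.get(col) or "" for col in columns)
        hits = sorted(term for term, pat in patterns.items() if pat.search(text))
        if hits:
            yield row, hits


# Made-up rows for illustration only, not taken from the leak.
SAMPLE = """Title,Summary
Patrol report,Unit met staff from Danish Demining Group near the site
IED found,___ reported an IED near ___
"""

rows = list(csv.DictReader(io.StringIO(SAMPLE)))
matches = list(find_mentions(rows, ["Danish", "Denmark"]))
for row, hits in matches:
    print(hits, row["Title"])
```

When names have been purged from the text columns, a search like this returns almost nothing no matter how long the list of terms is, which matches what we saw.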

Update: It turns out that Ekstra Bladet got the redacted version of the data from Wikileaks. Apparently some 6 international news organisations (and the Danish newspaper Information) got the full, unredacted data. They won’t be limited in the ways mentioned below.

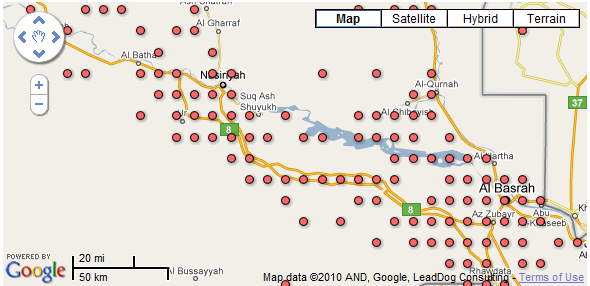

This caused us to temporarily abandon the search for interesting individual events and instead try to visualize the events in aggregate using maps. I had readied a heatmap tile-renderer which, when fed the Afghanistan data, produces really nice zoomable heatmaps overlaid on Google Maps. When loaded with the Iraq data, however, the heatmap tiles had strange artifacts. This turned out to be because the precision of the reports’ geo-coordinates has been truncated. We chose not to publish the heatmap, but the effect is also evident on this Google Fusion Tables-based map of IED-attacks (article text in Danish). The geo-precision truncation makes it impossible to produce something like the Guardian IED heatmap, which shows IED-attacks hugging roads and major cities.
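One way to check for this kind of truncation is to count the decimal places in the raw coordinate strings. The following is a minimal sketch, not the code behind the heatmap renderer. The function names and the sample coordinates are made up, and it assumes the coordinates are available as decimal-degree strings. A dump that drops trailing zeros would under-report precision with this approach.

```python
from collections import Counter


def decimal_places(value: str) -> int:
    """Number of digits after the decimal point in a coordinate string."""
    value = value.strip()
    if "." not in value:
        return 0
    return len(value.split(".", 1)[1])


def precision_histogram(coords):
    """Count (lat, lon) string pairs by their number of decimal places.

    The larger of the two values is used for each pair.
    """
    return Counter(
        max(decimal_places(lat), decimal_places(lon)) for lat, lon in coords
    )


def grid_spacing_km(places: int) -> float:
    """Approximate north-south spacing between neighbouring truncated latitudes.

    Uses the rule of thumb that one degree of latitude is roughly 111 km.
    """
    return 111.0 / (10 ** places)


# Made-up coordinates for illustration only.
sample = [("33.30", "44.40"), ("33.31", "44.39"), ("33.312345", "44.398765")]
for places, count in sorted(precision_histogram(sample).items()):
    print(f"{places} decimals: {count} reports, ~{grid_spacing_km(places):.3f} km grid")
```

When nearly all reports share the same small number of decimals, the points collapse onto a regular grid, and a heatmap renders that grid instead of roads and cities.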

We did manage to produce some body count-based articles before the embargo. Creating simple infographics showing report- and attack-frequency over time is also possible. Looking at the reports, it is also fairly easy to establish that Iraqi police mistreated prisoners. Danish soldiers are known to have handed over prisoners to Iraqi police (via British troops), making this significant in a Danish context. We have, however, not been able to use the reports to scrutinize the Danish involvement in the Iraq war in the same depth that we could with the Afghanistan data.
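The frequency-over-time figures mentioned above do not depend on the redacted fields, so they are easy to compute. Here is a minimal sketch of counting reports per month. The function name, the timestamp format and the sample values are assumptions for illustration, not details taken from the dump.

```python
from collections import Counter
from datetime import datetime


def reports_per_month(date_strings, fmt="%Y-%m-%d %H:%M:%S"):
    """Return a sorted list of ((year, month), count) pairs.

    `fmt` is an assumed timestamp format; adjust it to match the real data.
    """
    counts = Counter()
    for raw in date_strings:
        stamp = datetime.strptime(raw, fmt)
        counts[(stamp.year, stamp.month)] += 1
    return sorted(counts.items())


# Made-up timestamps for illustration only.
dates = ["2006-01-03 10:00:00", "2006-01-20 08:30:00", "2006-02-01 00:00:00"]
monthly = reports_per_month(dates)
for (year, month), count in monthly:
    print(f"{year}-{month:02d}: {count}")
```

Filtering the rows by report category before counting would give attack frequency instead of overall report frequency.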

We initially thought the redactions were only for the pre-embargo data dump and that an unredacted dataset might become available post-embargo. That seems not to be the case though, since the reports Wikileaks published online after the embargo are also redacted.

I’m not qualified to say whether the redactions in the Iraq reports are necessary to protect the individuals mentioned in them. It is worth noting that the Pentagon itself found that no sources were revealed by the Afghanistan leak. The Iraq leak is a great resource for documenting the brutality of the war there, but the redactions do make it difficult to make sense of individual events.